Language NIMs

Overview

The LLMs hosted in the API catalog are packaged as NVIDIA NIM microservices. Select models are also available as downloadable container images with an NVIDIA AI Enterprise license entitlement. By downloading these images from NVIDIA’s API catalog, enterprise developers can self-host NIMs locally, retaining ownership of their model customizations and infrastructure choices and full control of their IP and AI applications.

Available Models

You can access the API reference for the available models here.

Select models are available as downloadable container images and are supported with an NVIDIA AI Enterprise entitlement. These models come with additional OpenAI API specification details for running them as self-hosted, local NIMs.

LLM NIM containers include:

- An optimized LLM
- APIs conforming to the OpenAI API specification
- Optimized inference engines

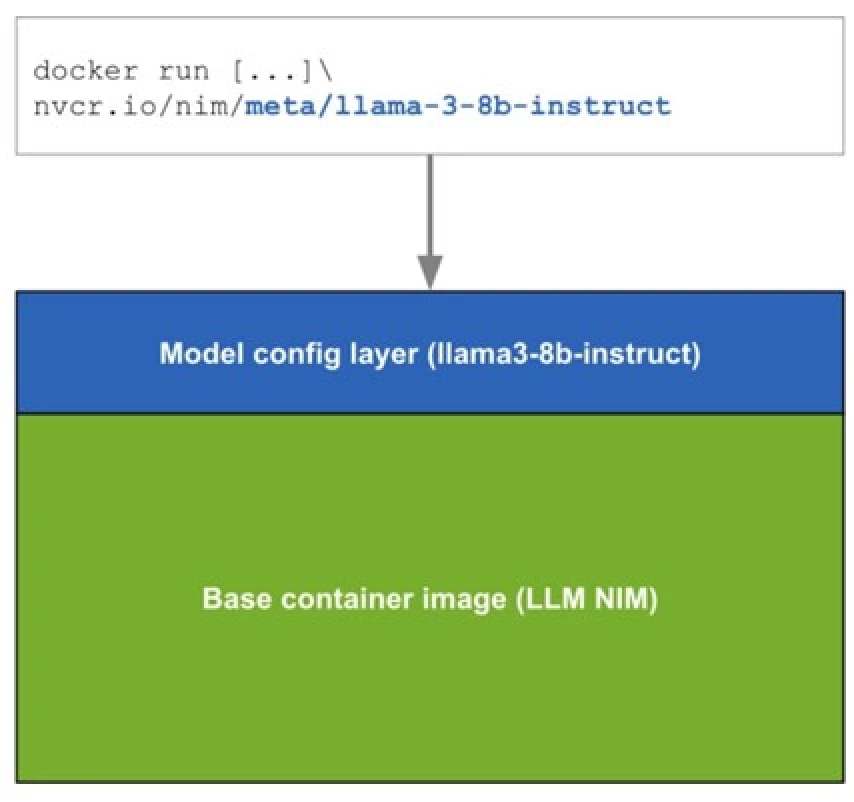
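Because the container APIs follow the OpenAI specification, a self-hosted NIM can be called with an ordinary chat completions request. The following is a minimal Python sketch using only the standard library. It assumes the NIM listens at `http://localhost:8000/v1` and serves `meta/llama3-8b-instruct`; adjust the base URL and model name to your deployment. The helper function names are hypothetical.

```python
import json
import urllib.request

# Assumption: a self-hosted NIM reachable on localhost port 8000; change for your deployment.
DEFAULT_BASE_URL = "http://localhost:8000/v1"


def build_chat_request(model: str, prompt: str, max_tokens: int = 64) -> dict:
    """Build an OpenAI-style chat completions request body."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def send_chat_request(body: dict, base_url: str = DEFAULT_BASE_URL) -> dict:
    """POST the body to the /chat/completions endpoint and return the parsed JSON reply."""
    req = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))


def extract_reply(response: dict) -> str:
    """Pull the assistant's text out of an OpenAI-style chat completion response."""
    return response["choices"][0]["message"]["content"]
```

For example, `extract_reply(send_chat_request(build_chat_request("meta/llama3-8b-instruct", "Write a haiku about GPUs.")))` returns the model's reply when a NIM is running at the assumed address. Keeping request construction separate from the network call makes the payload easy to inspect or to reuse with any other OpenAI-compatible client.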

Downloadable NIMs are packaged as container images on a per-model or per-model-family basis. Each NIM is its own Docker container with a model, such as meta/llama3-8b-instruct. These containers include a runtime that runs on any NVIDIA GPU with sufficient GPU memory, although only some model/GPU combinations are optimized. NIM automatically downloads the model from NGC, using a local filesystem cache when one is available. Each NIM is built from a common base, so once one NIM has been downloaded, downloading additional NIMs is extremely fast.
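The download-with-cache behavior described above can be sketched as a cache-or-fetch helper. This is an illustration only, not NIM's actual implementation: `get_model`, the cache directory naming scheme, and the `download` callback (a stand-in for the NGC fetch) are all hypothetical.

```python
from pathlib import Path
from typing import Callable


def get_model(model_name: str, cache_dir: Path,
              download: Callable[[str, Path], None]) -> Path:
    """Return a local directory holding the model, downloading only on a cache miss.

    `download` is a hypothetical stand-in for the NGC fetch; it must populate `target`.
    """
    # Hypothetical layout: names like "meta/llama3-8b-instruct" contain "/",
    # so map each model name to a single flat directory name.
    target = cache_dir / model_name.replace("/", "--")
    if target.is_dir() and any(target.iterdir()):
        return target  # cache hit: reuse files already on the local filesystem
    target.mkdir(parents=True, exist_ok=True)
    download(model_name, target)  # cache miss: fetch from the remote registry
    return target
```

A non-empty directory is treated as a hit here for simplicity; a production cache would also verify that a download completed (for example with a checksum or marker file) before trusting it.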

When a NIM is first deployed, it inspects the local hardware configuration and the optimized models available in the model registry, then automatically chooses the best version of the model for that hardware. For a subset of NVIDIA GPUs (see the Support Matrix), NIM downloads the optimized TensorRT (TRT) engine and runs inference using the TensorRT-LLM library. For all other NVIDIA GPUs, NIM downloads a non-optimized model and runs it using the vLLM library.
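The hardware-dependent choice of backend can be sketched as a small selection function. This is a simplified illustration of the logic described above, not NIM's code: the `Profile` type, the GPU names, and the memory figures are hypothetical, and the real set of optimized GPUs comes from the Support Matrix.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class Profile:
    backend: str     # "tensorrt-llm" or "vllm"
    optimized: bool  # True only when a prebuilt optimized engine is used


def select_profile(gpu_name: str, gpu_memory_gb: float,
                   required_memory_gb: float,
                   optimized_gpus: FrozenSet[str]) -> Profile:
    """Choose a backend: an optimized TensorRT-LLM engine for supported GPUs, vLLM otherwise."""
    # Any NVIDIA GPU can run the model, but only if it has enough memory.
    if gpu_memory_gb < required_memory_gb:
        raise RuntimeError(
            f"{gpu_name} has {gpu_memory_gb} GB; model needs {required_memory_gb} GB"
        )
    if gpu_name in optimized_gpus:
        return Profile(backend="tensorrt-llm", optimized=True)
    # Fallback path: non-optimized model served with vLLM.
    return Profile(backend="vllm", optimized=False)
```

For example, with `optimized_gpus=frozenset({"GPU-A"})`, a call for `"GPU-A"` with enough memory yields a TensorRT-LLM profile, while any other GPU with enough memory falls back to vLLM.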

NIMs are distributed as NGC container images through the NVIDIA Enterprise NGC Catalog. Each container in the NGC catalog has a security scan report, which provides a security rating for that image, a breakdown of CVE severity by package, and links to detailed information on the CVEs.
