API Catalog Quickstart Guide

Interacting with NIM through the API Endpoints

This Quickstart Guide shows you how to interact with a model that has been hosted as a NIM through a live API endpoint, both directly through a web-based UI and through Python code.

The NIM is hosted by NVIDIA on NVIDIA's own DGX Cloud environment.

NOTE: This guide uses the llama-3.1-8b-instruct model, but you can follow the same steps with any model available in the NVIDIA API Catalog.

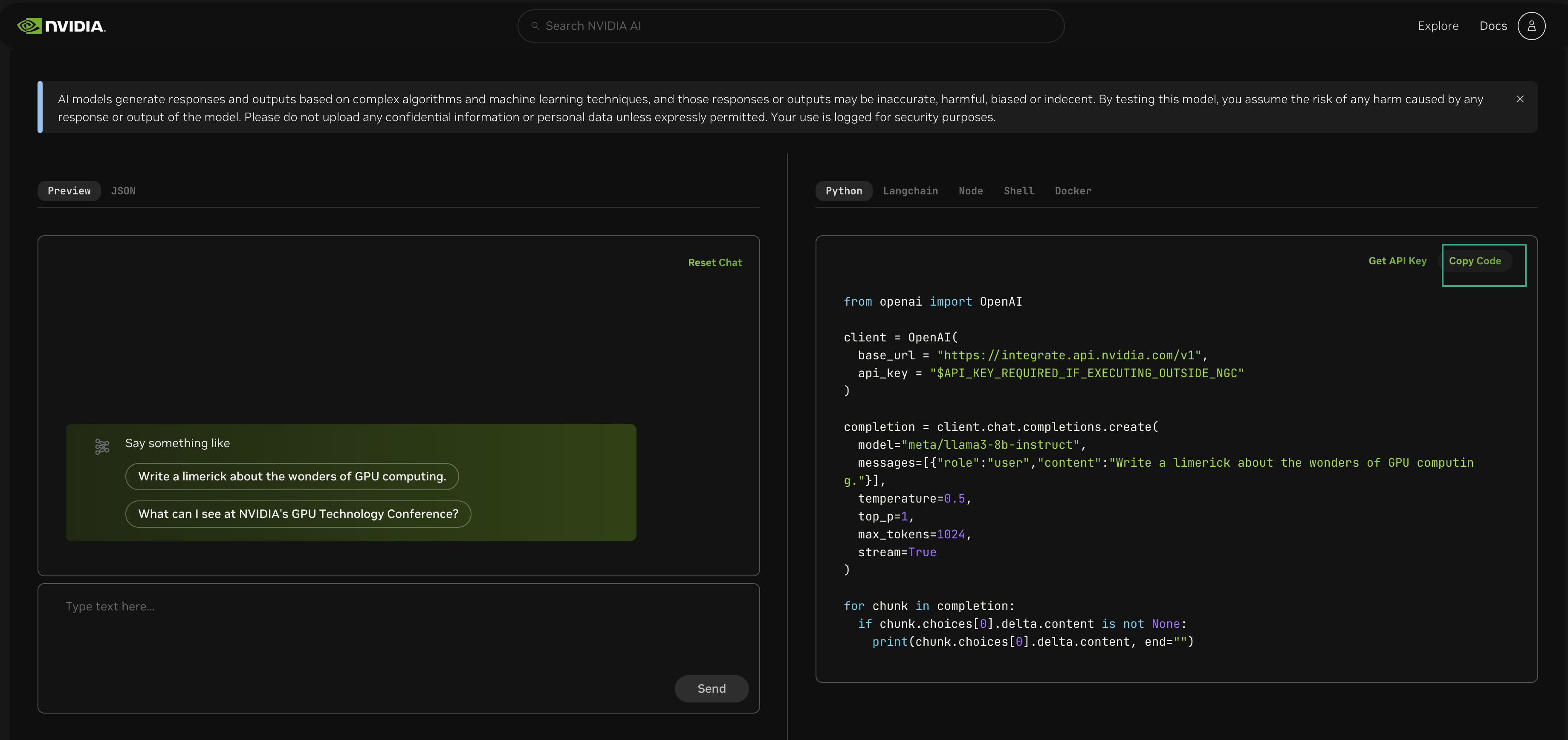

Interacting through the web-based UI

The following instructions show you how to interact with the llama-3.1-8b-instruct model API using a web-based UI.

-

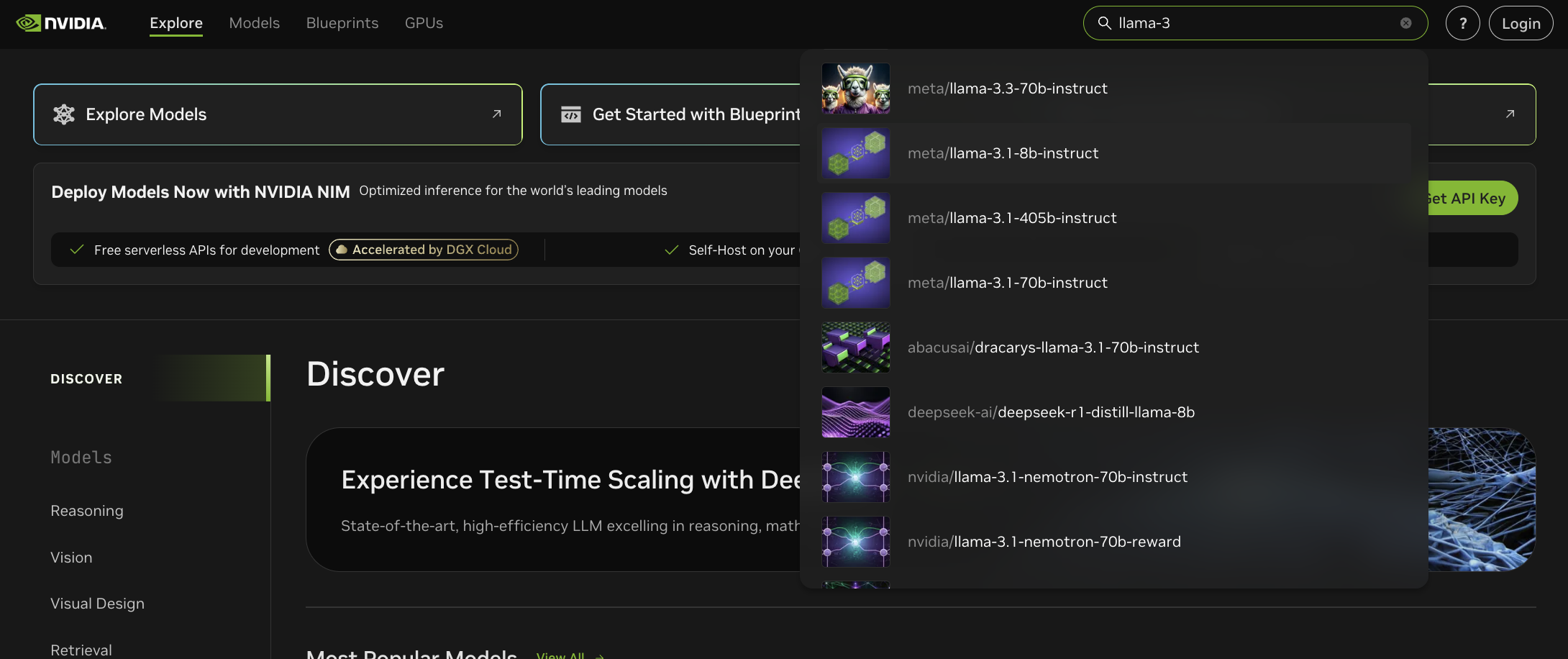

Visit build.NVIDIA.com on your web browser.

This webpage shows you the full catalog of NIM microservices. You can explore by Model type or Industry, or use the search bar to find a specific NIM. -

Within the search bar, type llama-3 and select the meta/llama-3.1-8B-instruct model.

This takes you to the model page. You may see a warning regarding the use of third-party models. Click Acknowledge & Continue to proceed.

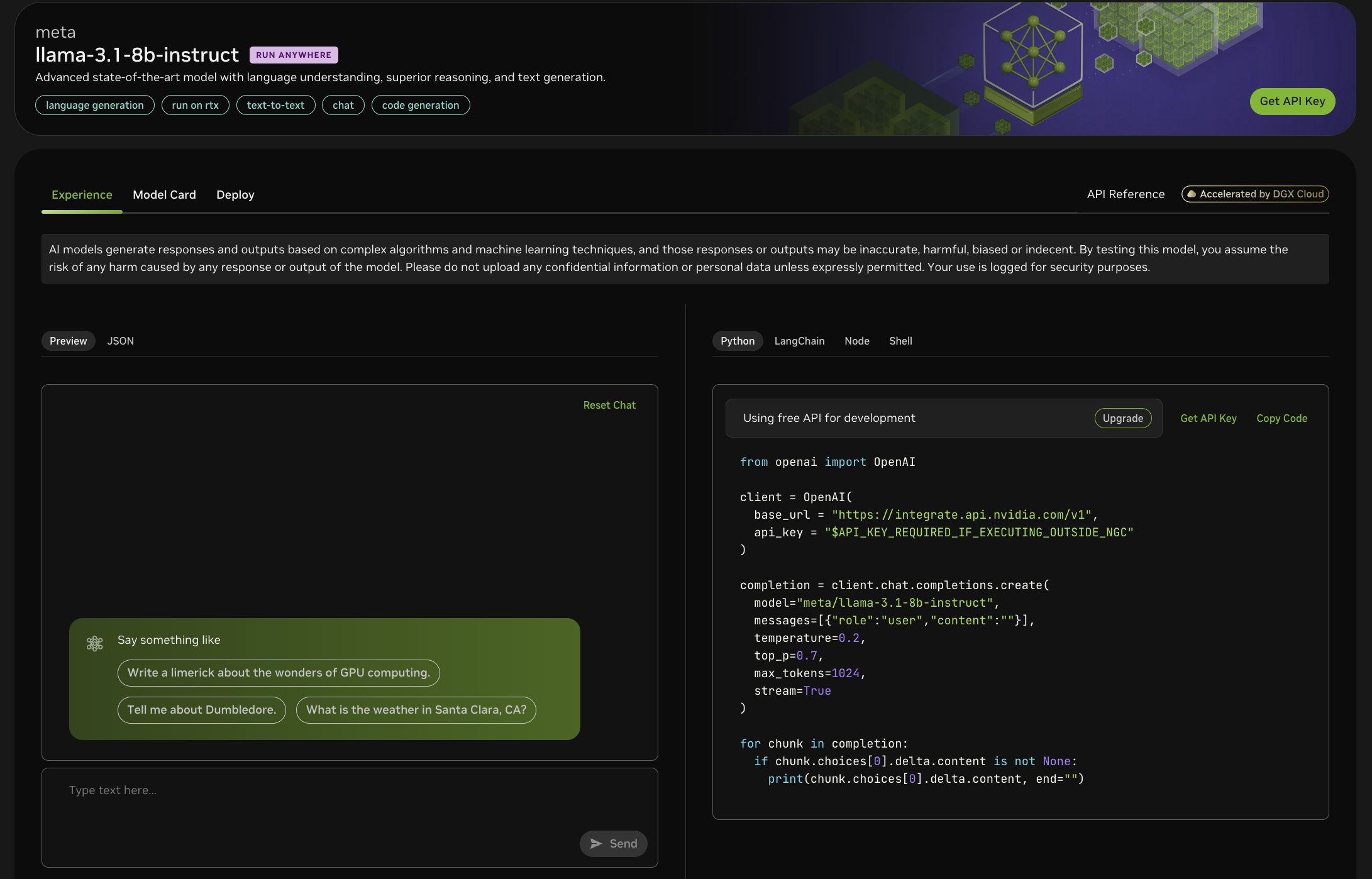

- In the Preview tab on the left of the page, you can interact with the NIM through the web UI. Click one of the example questions, or write your own question in the field marked Type text here, then click Send.

Your request is sent to the model API, which is hosted on NVIDIA DGX Cloud, and the model's response appears in the Preview box below your request.

Interacting through Python

You can also interact with the llama-3.1-8b-instruct model API endpoint using Python. Follow the instructions below to set up an API Key and send a request to a model.

If you already have an NVIDIA Developer API Key, skip to step 4 (copying the Python code).

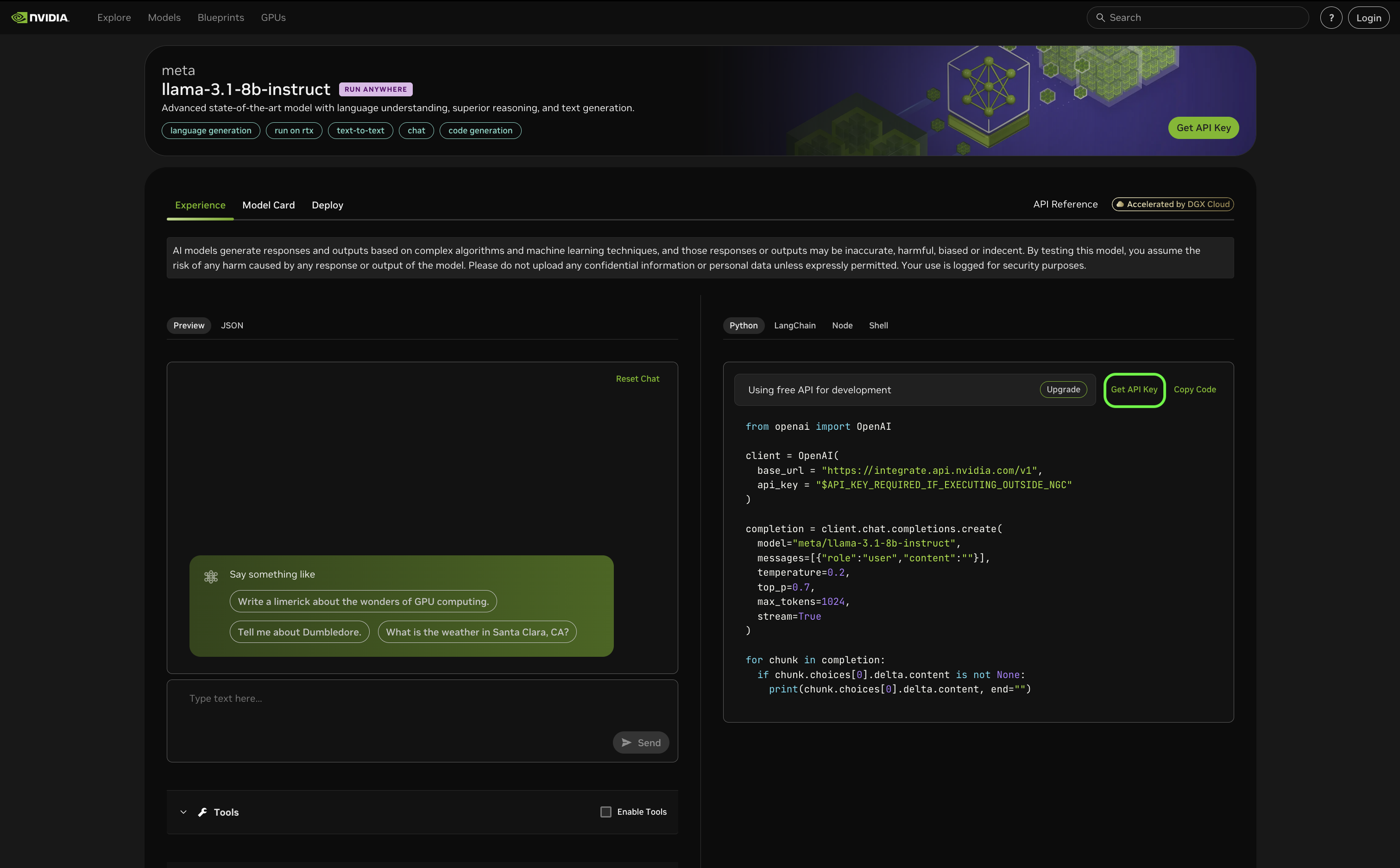

- In the right-hand pane of the model page, click Get API Key.

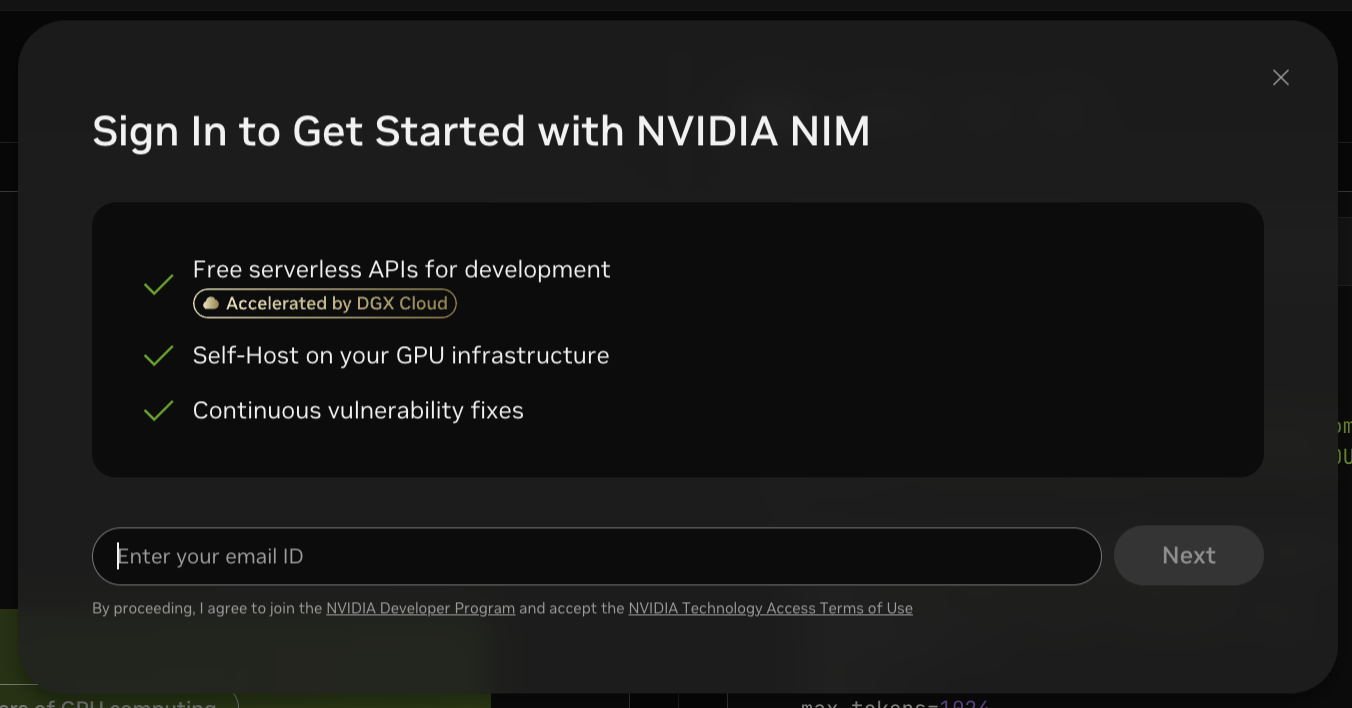

- Enter your email address in the pop-up window, and click Next. By signing up, you will become a member of the Developer Program.

If you already have an NVIDIA Account, enter the email address associated with the account at this step. You will then be prompted to sign in.

If you do not already have an NVIDIA Account, you will be prompted to set a password. Follow the on-screen instructions to set up the account.

- Once account setup is complete, or you have logged in as a returning user, you will be redirected back to the model page. A pop-up window will show your new API Key. Click Copy Key, and be sure to store your key somewhere safe.

- Click the Copy Code button to copy the Python code given in the pane on the right. Paste that code into a Python environment or text editor.

- Within the code, replace "API_KEY_REQUIRED_IF_EXECUTING_OUTSIDE_NGC" with your API key.

- You can now run the code. Replace "Write a limerick about the wonders of GPU computing." with your own request to send to the model.
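For orientation, the request that the copied code sends can be sketched with only the Python standard library. This is a minimal illustration, not the code shown on the model page, and it rests on several assumptions that this guide does not state: that the endpoint is the OpenAI-compatible URL `https://integrate.api.nvidia.com/v1/chat/completions`, that the key is read from an environment variable named `NVIDIA_API_KEY` (a hypothetical name chosen here so the key stays out of the source file), and that the `temperature` and `max_tokens` values are reasonable defaults. The helper names `load_api_key`, `build_request`, and `ask` are also illustrative.

```python
import json
import os
import urllib.request

# Assumed values: neither the endpoint URL nor these defaults appear in this guide.
API_URL = "https://integrate.api.nvidia.com/v1/chat/completions"
MODEL = "meta/llama-3.1-8b-instruct"


def load_api_key(env_var: str = "NVIDIA_API_KEY") -> str:
    """Read the API key from an environment variable (hypothetical name)."""
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable to your API key.")
    return key


def build_request(api_key: str, prompt: str) -> urllib.request.Request:
    """Build a chat-completion POST request without sending it."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.2,   # assumed sampling setting
        "max_tokens": 1024,   # assumed response length limit
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
        method="POST",
    )


def ask(api_key: str, prompt: str, timeout: float = 60.0) -> str:
    """Send the prompt and return the text of the first choice in the reply."""
    with urllib.request.urlopen(build_request(api_key, prompt), timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the key exported in your shell, `print(ask(load_api_key(), "Write a limerick about the wonders of GPU computing."))` would send the same example prompt used in the steps above. Keeping the key in an environment variable rather than in the file is one way to follow the advice to store it somewhere safe.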
